Unacceptable, where is my privacy?

Exploring Accidental Triggers of Smart Speakers

Lea Schönherr, Maximilian Golla, Thorsten Eisenhofer, Jan Wiele, Dorothea Kolossa, and Thorsten Holz

Ruhr University Bochum & Max Planck Institute for Security and Privacy

tl;dr: A comprehensive study of accidental triggers of smart speakers.

Overview

«Unacceptable», will it rain today?

Voice assistants in smart speakers analyze every sound in their environment for their wake word, e.g., «Alexa» or «Hey Siri», before uploading the audio stream to the cloud. This supports users' privacy: only the necessary audio is captured and nothing else is recorded. The sensitivity of the wake word detection is a trade-off between data protection and technical optimization, and the detector can be tricked by similar words or sounds, which results in an accidental trigger.
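The sensitivity trade-off can be illustrated with a toy threshold model. This is a minimal sketch, assuming the detector emits a single confidence score per clip and fires when that score reaches a threshold; the function name and all scores are invented for illustration, and real wake word engines are considerably more complex.

```python
from typing import List, Tuple


def count_errors(scored: List[Tuple[float, bool]], threshold: float) -> Tuple[int, int]:
    """Count false accepts and false rejects at one detection threshold.

    `scored` holds (detector_score, is_wake_word) pairs; a clip triggers the
    assistant when its score is at or above `threshold`.
    """
    false_accepts = sum(1 for s, real in scored if s >= threshold and not real)
    false_rejects = sum(1 for s, real in scored if s < threshold and real)
    return false_accepts, false_rejects


# Invented scores for two genuine wake words and three other phrases.
clips = [
    (0.95, True),   # "Alexa"
    (0.70, True),   # "Alexa", spoken quickly from across the room
    (0.80, False),  # "a letter"
    (0.60, False),  # "unacceptable"
    (0.20, False),  # unrelated speech
]

for t in (0.5, 0.75, 0.9):
    fa, fr = count_errors(clips, t)
    print(f"threshold={t}: false accepts={fa}, false rejects={fr}")
```

In this toy data, a low threshold lets "a letter" and "unacceptable" through (two false accepts), while a high threshold rejects every other phrase but also misses the quickly spoken «Alexa».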

In July 2019, we started measuring and analyzing accidental triggers of smart speakers. We automated the process of finding accidental triggers and measured their prevalence across 11 smart speakers from 8 different manufacturers using professional audio datasets and everyday media such as TV shows and newscasts.

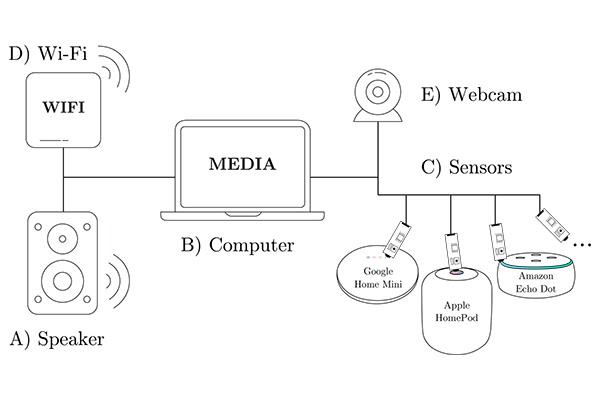

Setup

Our setup consists of a loudspeaker that plays media files from a computer. We use light sensors to monitor the LED activity indicators of a group of smart speakers. All speakers are connected to the Internet over Wi-Fi. To verify the measurement results, we record a video of each measurement via a webcam with a built-in microphone. Moreover, we record network traces to analyze the speakers' activity on the network level. The entire setup is connected to a network-controllable power socket that we use to power cycle the speakers in case of failures or non-responsiveness. Between media playbacks, we play a test signal to ensure that all smart speakers work and trigger as intended.
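The LED monitoring and the test-signal check can be sketched as follows. This is a hypothetical illustration, assuming each light sensor yields a stream of brightness samples; the function names, speaker labels, thresholds, and sample values are our own and not part of the original setup.

```python
from typing import Dict, List


def detect_activations(
    readings: List[float], baseline: float, delta: float, min_gap: int
) -> List[int]:
    """Return the sample indices at which an LED switches on.

    A sample counts as lit when it exceeds baseline + delta. An activation is
    the first lit sample after at least `min_gap` dark samples, which keeps a
    flickering LED from being counted several times (debouncing).
    """
    activations: List[int] = []
    dark_run = min_gap  # treat the start of the trace as a long dark period
    for i, value in enumerate(readings):
        if value > baseline + delta:
            if dark_run >= min_gap:
                activations.append(i)
            dark_run = 0
        else:
            dark_run += 1
    return activations


def unresponsive(activations: Dict[str, List[int]], test_window: range) -> List[str]:
    """Speakers that did not light up while the test signal was playing.

    These are the candidates for a power cycle via the controllable socket.
    """
    return [
        name
        for name, onsets in activations.items()
        if not any(i in test_window for i in onsets)
    ]


# Invented light-sensor trace: activation at sample 3, flicker at 6, activation at 10.
trace = [10, 11, 10, 80, 85, 12, 82, 10, 10, 10, 90, 11]
print(detect_activations(trace, baseline=10, delta=30, min_gap=3))  # [3, 10]
print(unresponsive({"echo_dot": [3, 10], "home_mini": []}, range(0, 5)))  # ['home_mini']
```

The debounce gap trades off responsiveness against robustness: a short gap may split one activation into several events, while a long gap may merge two genuine triggers that occur close together.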

Findings: «AH L EH K S AH»

Our setup identified more than 1,000 sequences that incorrectly trigger smart speakers. For example, we found that, depending on the pronunciation, «Alexa» reacts to the words "unacceptable" and "election," while «Google» often triggers on "OK, cool." «Siri» can be fooled by "a city," «Cortana» by "Montana," «Computer» by "Peter," «Amazon» by "and the zone," and «Echo» by "tobacco." Videos of such accidental triggers are listed in the Examples section below.

In our paper, we analyze a diverse set of audio sources, explore gender and language biases, and measure the reproducibility of the identified triggers. To better understand accidental triggers, we describe a method to craft them artificially. By reverse-engineering the communication channel of an Amazon Echo, we provide novel insights into how commercial companies deal with such problematic triggers in practice. Finally, we analyze the privacy implications of accidental triggers and discuss potential mechanisms to improve the privacy of smart speakers.
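Reproducibility can be summarized as the fraction of replays in which a phrase triggers a speaker. The following is a minimal sketch with invented replay outcomes; the function names and the 0.5 cut-off for a "robust" trigger are our own assumptions, not values from the study.

```python
from typing import Dict, List


def reproducibility(replays: Dict[str, List[bool]]) -> Dict[str, float]:
    """Fraction of replays in which each candidate phrase triggered the speaker.

    Phrases without any recorded replay are skipped to avoid dividing by zero.
    """
    return {
        phrase: sum(outcomes) / len(outcomes)
        for phrase, outcomes in replays.items()
        if outcomes
    }


def robust_triggers(replays: Dict[str, List[bool]], min_rate: float) -> List[str]:
    """Phrases whose reproducibility is at least `min_rate`, most reliable first."""
    rates = reproducibility(replays)
    kept = [p for p, r in rates.items() if r >= min_rate]
    return sorted(kept, key=lambda p: rates[p], reverse=True)


# Invented outcomes of replaying each phrase four times.
replays = {
    "a letter": [True, True, False, True],        # 3 of 4
    "unacceptable": [False, True, False, False],  # 1 of 4
    "election": [True, True, True, True],         # 4 of 4
}
print(reproducibility(replays))
print(robust_triggers(replays, min_rate=0.5))
```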

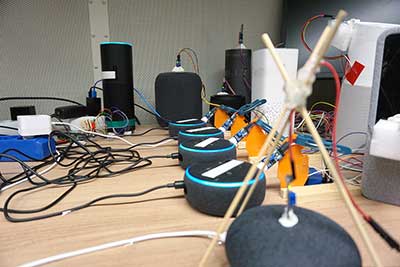


Evaluated Voice Assistants

| No. | Assistant | Wake Word(s) | Language | Smart Speaker |
|---|---|---|---|---|
| 1 | Amazon: Alexa | «Alexa» | en_US, de_DE | Amazon: Echo Dot (v3) |
| 2 | Amazon: Alexa | «Computer» | en_US, de_DE | Amazon: Echo Dot (v3) |
| 3 | Amazon: Alexa | «Echo» | en_US, de_DE | Amazon: Echo Dot (v3) |
| 4 | Amazon: Alexa | «Amazon» | en_US, de_DE | Amazon: Echo Dot (v3) |
| 5 | Google: Assistant | «OK/Hey Google» | en_US, de_DE | Google: Home Mini |
| 6 | Apple: Siri | «Hey Siri» | en_US, de_DE | Apple: HomePod |
| 7 | Microsoft: Cortana | «Hey/- Cortana» | en_US | Harman Kardon: Invoke |
| 8 | Xiaomi: Xiao AI | «Xiǎo ài tóngxué» | zh_CN | Xiaomi: Mi AI Speaker |
| 9 | Tencent: Xiaowei | «Jiǔsì'èr líng» | zh_CN | Tencent: Tīngtīng TS-T1 |
| 10 | Baidu: DuerOS | «Xiǎo dù xiǎo dù» | zh_CN | Baidu: NV6101 (1C) |
| 11 | SoundHound: Houndify | «Hallo/Hey/Hi Magenta» | de_DE | Deutsche Telekom: Magenta Speaker |

Examples

Accidental Triggers

Note: The audio processing and speech recognition systems in smart speakers may involve non-deterministic processes. Moreover, voice assistants like Alexa are based on cloud-assisted, constantly evolving language models. Thus, the examples shown below might not work with your voice assistant at home.

«Alexa» Local+Cloud Trigger

"A letter" is confused with «Alexa.» This trigger fools both the local and the cloud-based wake word model.

Game of Thrones - S01E08 - The Pointy End.

Type: TV Show, Network: HBO (US), Originally Aired: June 5, 2011, Found: October 23, 2019, Timestamp: ~42:00.

«Alexa» Local Trigger

"We like some privacy" is confused with «Alexa.» This trigger is able to fool the local wake word model only.

Game of Thrones - S03E04 - And Now His Watch Is Ended.

Type: TV Show, Network: HBO (US), Originally Aired: April 21, 2013, Found: December 12, 2019, Timestamp: ~32:10.

«Hey Siri» Local+Cloud Trigger

"Hey Jerry" is confused with «Hey Siri.» This trigger fools both the local and the cloud-based wake word model.

Modern Family - S02E06 - Halloween.

Type: TV Show, Network: ABC, Originally Aired: October 27, 2010, Found: December 25, 2019, Timestamp: ~04:35.

«OK Google» Local Trigger

"Okay, who is reading" is confused with «OK Google.» This trigger is able to fool the local wake word model only.

Modern Family - S01E13 - Fifteen Percent.

Type: TV Show, Network: ABC, Originally Aired: January 20, 2010, Found: October 20, 2019, Timestamp: ~21:50.
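The distinction between local and local+cloud triggers in the examples above can be expressed as a small decision rule. This sketch assumes two observable signals per playback: whether the LED lit up (the on-device model accepted the audio) and whether the assistant went on to respond (the cloud-based model confirmed the wake word). The type and function names are hypothetical, and how these signals are obtained in practice is an assumption.

```python
from enum import Enum


class TriggerType(Enum):
    """Hypothetical labels mirroring the categories used in the examples."""
    NONE = "no trigger"
    LOCAL = "local trigger"
    LOCAL_AND_CLOUD = "local+cloud trigger"


def classify_trigger(led_lit: bool, assistant_responded: bool) -> TriggerType:
    """Label one playback of a candidate phrase.

    led_lit: the on-device (local) wake word model accepted the audio,
        observed via the LED activity indicator.
    assistant_responded: the cloud-based model confirmed the wake word and
        the assistant went on to process the request (assumed observable).
    """
    if not led_lit:
        # The local model did not fire.
        return TriggerType.NONE
    if assistant_responded:
        return TriggerType.LOCAL_AND_CLOUD
    # The local model fired, but the cloud model rejected the wake word.
    return TriggerType.LOCAL


print(classify_trigger(led_lit=True, assistant_responded=False).value)  # local trigger
```

Under this assumed two-stage model, a local trigger still matters for privacy: the cloud model can only reject audio it has received, so that audio has presumably already left the device.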

Protect Your Privacy

Visit the links below to learn more about your smart speaker voice recordings and what you can do to better protect your privacy.

- Amazon Alexa: Privacy Settings

- Google Assistant: Activity

- Apple Siri: Privacy and Grading

- Microsoft Cortana: Privacy Dashboard

To learn more about the privacy policies of smart speakers, see:

- Smart Speaker Privacy Policies

In the Media

Below is a list of selected news articles covering our work.

- Ruhr University Bochum: «When Speech Assistants Listen Even Though They Shouldn't» (EN)

- NDR: «Wenn der smarte Lautsprecher mit dem Tatort-Kommissar spricht» (DE)

- Süddeutsche Zeitung: «Wenn Alexa aus Versehen lauscht» (DE)

- STRG_F: «Sex, Streit, Arztgespräche: wie oft Smart Speaker heimlich mithören» (DE)

- tagesschau.de: «Die lauschenden Lautsprecher» (DE)

- Tagesthemen: «Sprachassistenten hören mit» (DE)

- Ars Technica: «Uncovered: 1,000 phrases that incorrectly trigger Alexa, Siri, and Google Assistant» (EN)

- ZDF logo!: «Hat Siri schlechte Ohren?» (DE)

- detektor.fm: «Alexa, spionierst du mich aus?» (DE)

- Fast Company: «Tired of Saying 'Hey Google' and 'Alexa'? Change it Up with These Unintentional Wake Words» (EN)

- Mitteldeutscher Rundfunk: «Wann hören Sprachassistenten mit?» (DE)

- The Times: «Not in Front of the Speaker! Words that Wake Up Alexa» (EN)

- Voicebot.ai: «More Than 1,000 Phrases Will Accidentally Awaken Alexa, Siri, and Google Assistant: Study» (EN)

- Hessischer Rundfunk: «Immer ganz Ohr – Lauschangriff der Sprachassistenten» (DE)

- Max Planck Society: «Uninvited Listeners in Your Speakers» (EN)

- Remote Chaos Experience: «Alexa, Who Else Is Listening?» (EN)

- Tech Conversationalist: «Are You Accidentally 'Waking Up' Your Smart Devices?» (EN)

Technical Paper

Our work is currently under submission. We will update this website and share our dataset accordingly.

Abstract

Voice assistants like Amazon's Alexa, Google's Assistant, or Apple's Siri have become the primary (voice) interface in smart speakers that can be found in millions of households. For privacy reasons, these speakers analyze every sound in their environment for their respective wake word like "Alexa" or "Hey Siri" before uploading the audio stream to the cloud. Previous work reported on inaccurate wake word detection, which can be tricked using similar words or sounds like "cocaine noodles" instead of "OK Google."

In this paper, we perform a comprehensive analysis of such accidental triggers, i.e., sounds that should not have triggered the voice assistant. More specifically, we automate the process of finding accidental triggers and measure their prevalence across 11 smart speakers from 8 different manufacturers using everyday media such as TV shows and news. To systematically detect accidental triggers, we describe a method to artificially craft such triggers using a pronouncing dictionary and a weighted phone-based Levenshtein distance. In total, we have found hundreds of accidental triggers. Moreover, we explore potential gender and language biases and analyze the reproducibility. Finally, we discuss the resulting privacy implications of accidental triggers and explore countermeasures to reduce and limit their impact on users' privacy. To foster additional research on these sounds that mislead machine learning models, we publish a dataset of verified triggers as a research artifact.
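The idea of ranking candidate phrases by their phonetic closeness to a wake word can be illustrated with a weighted phone-level edit distance. This is a minimal sketch, assuming a tiny hand-written excerpt of an ARPAbet pronouncing dictionary (in the style of the CMU Pronouncing Dictionary, stress markers removed) and invented weights: substitutions cost 0.5 within vowels or within consonants, 1.0 across the two classes, and insertions or deletions cost 1.0. The dictionary entries, weights, and function names are our own assumptions, not the parameters used in the paper.

```python
from typing import Dict, List

# Hypothetical excerpt of an ARPAbet pronouncing dictionary (stress removed).
PRONUNCIATIONS: Dict[str, List[str]] = {
    "alexa": ["AH", "L", "EH", "K", "S", "AH"],
    "a": ["AH"],
    "letter": ["L", "EH", "T", "ER"],
    "election": ["IH", "L", "EH", "K", "SH", "AH", "N"],
    "unacceptable": ["AH", "N", "AH", "K", "S", "EH", "P", "T", "AH", "B", "AH", "L"],
    "weather": ["W", "EH", "DH", "ER"],
}

VOWELS = {"AA", "AE", "AH", "AO", "AW", "AY", "EH", "ER", "EY",
          "IH", "IY", "OW", "OY", "UH", "UW"}


def pronounce(phrase: str) -> List[str]:
    """Concatenate the phone sequences of all words in a phrase."""
    phones: List[str] = []
    for word in phrase.lower().split():
        phones.extend(PRONUNCIATIONS[word])  # raises KeyError for unknown words
    return phones


def substitution_cost(a: str, b: str) -> float:
    """Assumed weights: cheap within vowels or consonants, expensive across."""
    if a == b:
        return 0.0
    if (a in VOWELS) == (b in VOWELS):
        return 0.5
    return 1.0


def phone_distance(x: List[str], y: List[str], indel: float = 1.0) -> float:
    """Weighted Levenshtein distance between two phone sequences."""
    rows, cols = len(x) + 1, len(y) + 1
    d = [[0.0] * cols for _ in range(rows)]
    for i in range(1, rows):
        d[i][0] = i * indel
    for j in range(1, cols):
        d[0][j] = j * indel
    for i in range(1, rows):
        for j in range(1, cols):
            d[i][j] = min(
                d[i - 1][j] + indel,  # delete x[i-1]
                d[i][j - 1] + indel,  # insert y[j-1]
                d[i - 1][j - 1] + substitution_cost(x[i - 1], y[j - 1]),
            )
    return d[-1][-1]


wake_word = pronounce("alexa")
candidates = ["a letter", "election", "unacceptable", "weather"]
scores = {p: phone_distance(wake_word, pronounce(p)) for p in candidates}
for phrase, score in sorted(scores.items(), key=lambda item: item[1]):
    print(f"{phrase}: {score:.1f}")
```

A plain distance like this favors short phrases: "unacceptable" scores far from «Alexa» mainly because of its length, even though it contains a close match to the wake word. A practical search would likely also compare against sub-sequences of longer phrases.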